Recently I wrote about the challenges of event sourcing and CQRS in this article “What they don’t tell you about event sourcing”. A manager once told me “If you bring me a problem and don’t bring me the solution then you are the problem”. In light of this, I will address most of the issues I raised in the previous article and share my experience on how to tackle them. The topic of this article it’s arguably the hardest and most frustrating characteristic of distributed systems, eventual consistency.

Ben Horowitz once said that there are no silver bullets. But to eventual consistency there is, the holy grail to deal with eventual consistency, the one fits all solution, perhaps the greatest secret of our time:

…

*drum roll*

…

Do not use an eventually consistent model. Yeah, that’s it. If I was sitting next to you I’m sure I could hear your eyes roll, but bear with me.

One thing people often do is decide the technical implementation disregarding the business implications. As long as the solution complies with the requirements it is fine to use the sexiest, most hyped pattern or technology in the market.

Before jumping the gun on a decision like this you should sit down with the business and discuss the implications on the people using the system. Get first an idea of the quantity of the data, the performance and availability requirements, and whether or not your business can be eventually consistent. Be discerning and rigorous about the decision. Do you need to be eventually consistent? The quantity of data is often an excuse to use it. If your database fits inside a pen you don’t need big data. Just use your friendly neighborhood SQL or any other ACID technology. You are not just making the business life easier, you are making it easier by avoiding the extra complexity for every other developer who will have to maintain and increment on that system.

The first step for dealing with eventual consistency is not dealing with it at all.

It is crucial to understand where introducing eventual consistency will have an impact and where it won’t. Another important factor to take into account besides the users using the functionality are the consumers of that information. If another service depends on it to process a business rule, relying on an eventual consistency model will likely lead to erroneous results if the correct measures aren’t in place. Even when they are, the complexity of the consumer system will increase substantially.

Having said that, if your business is large enough the time will come when you will need eventual consistency. Sooner or later, when walking towards a microservice architecture, for example, eventual consistency will be needed due to all the distributed data models or due to availability constraints. It’s also fairly common when using an event-driven architecture due to the services reacting to those events which are intrinsically asynchronous.

Then the Sword of Damocles will hang over your head. How do you keep that horse’s tail hair from breaking you ask? Well here are some pointers:

The end to end argument is commonly mentioned in the context of computer networking and states that:

“…functions placed at low levels of a system may be redundant or of little value when compared with the cost of providing them at that low level.”

The same reasoning can be applied to a microservice architecture and can be especially useful when dealing with eventual consistency. Typically eventual consistency comes out due to the sheer scale of the data and due to performance reasons. This, however, doesn’t mean every single component in the architecture has to be eventually consistent, e.g. an eCommerce platform might have hundreds of millions of stock units, millions of products, and a handful of product categories. This implies the system to manage stock units would indeed benefit from an eventually consistent model or of an asynchronous message queue of some kind, on the other hand, the system that manages categories would hardly benefit from it. The user order flow will use all three of them along with several others. If the choice is made to make the stock information eventually consistent, the platform’s product search will also likely be eventually consistent, thus the stock information will be eventually accurate, e.g. a user can see stock on a product that doesn’t have stock. Nevertheless, as long as the checkout process has a way to validate the correct stock on the moment of the purchase it is possible to benefit from the eventually consistent model in that specific part of the architecture and the performance boost that comes with it.

The business process will most likely flow through several microservices and we can choose to make specific systems inside that flow eventually consistent, prioritizing performance where needed as long as there is a way to guarantee the correctness of the end to end flow.

Events are our most popular answer for reactive solutions and are the foundation for many microservice architectures, typically event-driven ones. However, they imply eventual consistency of some sort and the worst kind of eventual consistency: the one you choose to create. Why it is the worst kind? It’s the worst kind simply because you can’t blame it on some else obviously or on some shady database technology you are using for the first time.

The meaning of an event and how it is used can vary from system to system. The interaction between the publishing system and how the consumers handle the event has consequences throughout the whole microservice architecture. They usually imply eventual consistency due to the asynchronous queues between the domains. How to deal with this eventual consistency inside the system can be challenging. These usually patterns stand out:

Town Crier Events

This pattern uses events as simple notifications. The source system publishes an event notifying the consumers that something changed in its domain. Then the consumers will request additional information to the source system. E.g. if a user changes the door number of his address the system managing the user domain will publish a user address changed event with perhaps the id of the user and with the new door number. Using an approach with DDD in mind the events should reflect the intent of the user. But how many systems will be able to act on the door number alone without for example the remaining of the address? The consumers of the event will then have to request the source system for further information.

This approach provides a high level of decoupling between the domains. The user’s address information will only persist in the user domain. This allows for each domain to evolve seamlessly and independently from each other, along with the business needs with little impact to the consumers.

This is also the easiest and fastest approach to develop. The consumers don’t need to worry about ordering issues or persisting, initializing, and maintaining the data they need, they just request it.

This approach has its drawbacks, performance-wise there are better choices. The request to get the additional information can be avoided as I will explain below, although in most use cases a few additional HTTP calls aren’t an issue, there might be use cases where this is not an option.

The main issue and the main reason why this option should be seldom used is when you apply it to a larger scale. If this approach is used consistently throughout a microservice architecture you will have a complex spaghetti of HTTP calls and event flows that is very hard to understand and operate. The reasoning behind moving to a microservice architecture is to be horizontally scalable, so you can effectively scale a small part of the system that has a higher load. If this pattern is taken too far you will find that scaling a microservice will negatively impact other parts of the system. To deal with the additional throughput it will be needed to scale that specific microservice, but the scaling will produce a higher amount of HTTP calls to other microservices that might also require scaling of their own to deal with the additional load. Those microservices on the other hand will do additional HTTP calls to other microservices and so on. You will find that instead of a microservice architecture you have a distributed monolith and you don’t have horizontal scalability.

This pattern is still useful, used sparingly.

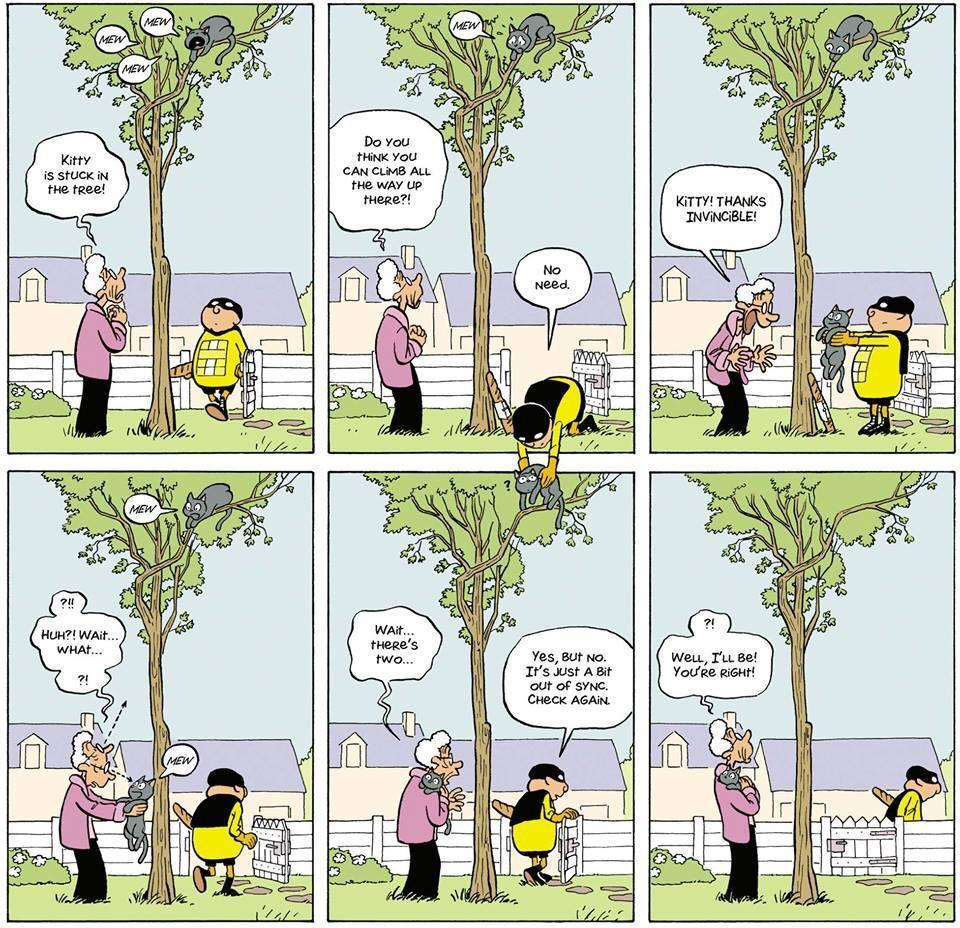

Bee Events

Bees do not intentionally carry out pollination, it is the unintended result of the bee’s travels. Pollen clings to the bee’s body and is rubbed off as the bee walks or flies from one blossom to another.

Using the door number event example I used in the last pattern, instead of requesting the source system for additional information, it is possible to save the data internally as a materialized read model. E.g. if the consumer needs the user and his address then it would listen to the UserCreatedEvent and save internally the relevant data when the address changes it would do the same. Then when the door number changed the consumer had internally the data it needed and no external dependency or HTTP call would be needed. Applied throughout the architecture many of the microservices will have a materialized view of the data relevant to that service, thus the bee comparison.

This has a better performance than the pattern before since accessing internal data is always faster than a remote HTTP call. It would also avoid external dependencies and the issue with the distributed monolith I mentioned before.

The drawbacks are obvious, a copy of the original system data will be scattered throughout each microservice. Although not a full copy but just a view of the relevant data for each microservice. Disk space is cheap we often hear, we hardly hear it from tech ops people though. The main issue isn’t the disk space, it is the initialization, maintenance, and keeping that data accurate. When a new consumer joins most likely will have to do an initial migration and will have to maintain the data updated and consistent.

When a bug occurs on the source system usually is propagated to all consumers and the fix is more complex than simply fixing the source system, it usually involves fixing all the consumers and all of their data.

Also when the source system needs to change the schema of the data it likely needs to be aligned with all the different consumers and whether or not they need to adapt. Involving complex operation between several databases that require effort from several different teams. The system is decoupled due to the events but there is a silent coupling everyone tends to miss that can be dangerous and can impact future developments.

This pattern shines the most on specific use cases that depend on a large number of partial events or when high performance is a must. Still should be used even more sparingly than the last one due to the reasons I mentioned.

Denormalized Event Schema

According to Eric Evans and DDD, events should be designed to reflect the user’s intent. This way events reflect what really happened. Adding event sourcing makes even more sense since the event stream will be a historical record of what happened, it will have business value being both the source of truth for the domain and an audit of the aggregates. More than an audit it will represent the very business process and the process flow through time.

That being said this rule should never be the first choice to design the schema of events. Although valuable and theoretically valid, in practice it will lead to increasingly complex architectures. The two patterns I mentioned before are a symptom of this problem that can easily be avoided if we take the time to understand the need behind each event. Instead of translating the user intent to the event schema understand the needs of your consumers and their use cases. This should be the rule of thumb when designing event schema. Using the same example as before, a user changes the door number of his address, first, we should understand which consumers will use the event and what is the use case behind that need. Perhaps the event is needed by the fraud system to understand if it is a valid address and the address doesn’t belong to a blocked country. In that case, a UserAddressChanged event containing the full address of the user would be more useful than an UserAddressDoorNumberChanged event that would only contain the door number. That way the consumers would have all the information they need without requesting anything to the source system and without saving any internal metadata, thus avoiding all the issues with the approaches I mentioned before. More information means the event is also more flexible to future use cases and new consumers.

I find it to be quite similar to the reasoning behind moving from normalized to denormalized read models. It is the main argument behind most NoSql databases to optimize the model for reads having it materialized the way the user needs and avoiding following a normalized third normal form model. Instead of each event sending unique information, they might repeat some of the information to facilitate the consumer.

The decision comes down to either choosing small, intent-driven events or larger denormalized events. Smaller events have the advantage of having a lower load on the message broker and overall better performance due to serialization and network latency. Larger events facilitate the consumers and smooth the path to less complex architectures.

For most use cases it’s best to use denormalized events rather than smaller ones. The consumers should be able to make the decisions they need using only the information in their domain and the information in the event. This will contain the dependencies between each service and enable a truly decoupled scalable architecture.

Eventual consistency doesn’t need to be slow. You should design and tune your system to have an inconsistency window as small as possible. Understand the load of your services and design them to process in (near) real-time. On an event-driven architecture that would mean not to have lag on the event consumption of the services feeding your read models for example. You do have to understand when the load peaks, how the system will behave in those situations, and manage expectations accordingly. Designing your services to be horizontally scalable and automatic scaling them when that occurs can be a good alternative.

Domain Segregation

In the specific case of event-driven architectures, having services depending on the information of other services using DDD and separating the events on internal and external to the domain can be a good choice. The external events will only be published when that change is reflected throughout that domain. Other domains will react to the external event and when consulting information that specific change will already be reflected. Imagine the scenario where there is a product domain and an order domain, each domain has a set of microservices with eventual consistent read models within them. The creation of a given product event would only be published when that product would be persisted throughout the product domain and only then an external event would be published. Then the order domain would react to that event and wouldn’t be the risk that the product domain responding with 404.

Several strategies became popular recently, most of them easy and straightforward, however, they are based on awfully wrong principles and should be avoided at all costs.

Fake strong consistency: It is possible to feed the UI with the information sent in the command. Assuming the changes eventually will reflect on the read model once the command is processed. I do this to my wife all the time, saying I’m cleaning the house while I’m actually writing articles and postponing the cleaning time. This assumes the command will succeed which should never be an assumption. It can fail due to transient issues (network or otherwise). Also, a DDD domain can and should reject a command that fails the domain’s validation.

Poll the read model: instead of the complexity and resource overhead of polling you should notify interested parties (p.e. the UI) that changes were done and update as needed. In an event-driven architecture this is solved naturally.

Block until changes are done: blocking the UI if there is a need to not advance until all changes are applied. This can add a lot of unnecessary complexity if it is only handled on the UI since it doesn’t guarantee concurrent users. It can also use a distributed transaction or lock which you should never do. Two-phase commit could also be an option but also a bad one. If the use case doesn’t support eventual consistency don’t go around it, just don’t use it and make the flow strongly consistent for that operation.

Read the writes: it is possible to return the resulting entity once the command is applied instead of fetching it from a read model. This actually can be an option if the write model isn’t fully async (there are synchronous requests and responses and not a queue in the middle). It can turn into a constraint if you need to scale the write and read models independently.

Eventual consistency has dire impacts on the architecture and local implementations, it’s up to you to leverage it so you don’t fall trap to its complexity.

It is also a consequence of the success of your application, learning to embrace it it’s perhaps what will eventually carry it forward.