Although we’re told a picture speaks a thousand words, that cliché seriously underestimates the value of a good image. Our understanding of how the world works is simplified by our ability to turn data into images. Imaging is at the heart of science: if it can be measured, it will be turned into an image to be analyzed.

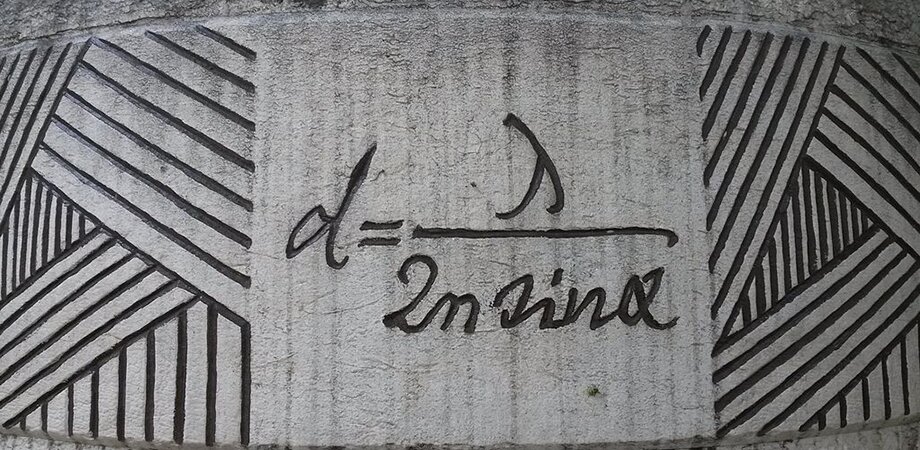

The limiting factor of imaging is resolution. How close can two objects be before an imaging system reduces them to a single blurred spot? That question was initially answered by Ernst Abbe in 1873. He theorized that if two objects are closer than about one-half wavelength, they cannot be resolved. Abbe obtained his limit by considering how diffraction by a lens would blur a point source.

For about a century, Abbe’s diffraction limit was taken as a scientific truth. Sure, you could play some games with the illuminating light and the imaging medium to get a factor of two or three, but factors of ten or 100 were inconceivable. That has now changed, with many imaging techniques able to resolve beyond Abbe’s diffraction limit, which now lies shattered in the corner of science’s workshop beneath the shadow of two Nobel prizes. But is there still a limit? How close can two objects be before they blur into a single spot? This is the question that Evgenii Narimanov from Purdue University has sought to answer in a recent Advanced Photonics paper.

Deblurring imaging

When it comes to imaging, it is a good deal simpler to set aside the concept of diffraction and think instead about information. When an object is imaged, light is scattered by the object towards the imaging system. The spatial pattern, or information, of the image is carried by the spatial frequencies of the light. To recover an accurate image, the imaging system must transmit those spatial frequencies without modifying them. But every system has its limits, so the contribution of some spatial frequencies will be lost.

The resulting image is made by recombining the spatial frequencies that are transmitted by the imaging system. If the imaging system cannot transmit frequencies above a certain limit, then the image will not contain that information, resulting in blurring. If you consider the imaging system as able to transmit spatial frequencies up to a cutoff frequency, but unable to transmit frequencies above the cutoff, then the resulting image resolution will be exactly as predicted by Abbe (but with simpler mathematics).
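The cutoff picture can be tried out numerically. What follows is a minimal one-dimensional sketch, not taken from Narimanov's paper: two point sources are passed through an idealized filter that keeps every spatial frequency up to a cutoff and discards the rest. The function names, the grid size, and the 0.8 "dip" threshold used to decide whether the two sources count as resolved are all assumptions made for illustration.

```python
import numpy as np

def lowpass_image(separation, cutoff, n=4096, length=64.0):
    """Image two point sources through an ideal low-pass filter (1D toy model).

    separation and length are in arbitrary length units; cutoff is in
    cycles per unit length. All parameter names are illustrative.
    """
    dx = length / n
    mid = n // 2                                   # grid index of x = 0
    k = int(round(separation / (2.0 * dx)))        # half-separation in samples
    obj = np.zeros(n)
    obj[mid - k] = 1.0                             # first point source
    obj[mid + k] = 1.0                             # second point source

    freqs = np.fft.fftfreq(n, d=dx)                # spatial frequencies
    spectrum = np.fft.fft(obj)
    spectrum[np.abs(freqs) > cutoff] = 0.0         # drop everything above the cutoff
    field = np.fft.ifft(spectrum).real
    return field ** 2, mid, k                      # intensity, centre index, offset

def is_resolved(separation, cutoff, dip=0.8):
    """Crude criterion (an assumption): the midpoint must be clearly dimmer
    than the positions of the two sources."""
    image, mid, k = lowpass_image(separation, cutoff)
    return image[mid] < dip * image[mid + k]

if __name__ == "__main__":
    for s in (1.0, 0.5, 0.2):
        print(f"separation {s}: resolved = {is_resolved(s, cutoff=2.0)}")
```

With the cutoff fixed, widely spaced sources show a clear dip between them, while sources much closer than the inverse of the cutoff frequency merge into a single spot. Raising the cutoff separates them again.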

Indeed, the information transmitted by an imaging system is described by exactly the same maths as used by engineers studying the transmission of data down telephone wires, which allows the tools of information theory to be used to predict the performance of imaging systems.

Decoding the messages in an image

Narimanov has gone a step further in abstracting the imaging process by only considering information transfer, independently of how that information is encoded. When that is done, the resolution of an image is determined only by the mutual information shared between the object and the image. In this framework, which is independent of all functional details, the resolution limit is given by the noise introduced during information transfer. In practice the noise is due to the detector, light scattering, fluctuations in illumination conditions, and many more details.

By taking this abstract approach, Narimanov was able to produce a theory that predicts the best possible resolution for an image based only on the ratio between the strength of the signal and the amount of noise. The higher the signal-to-noise ratio, the better the possible resolution.
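The flavor of such a result can be seen in the textbook Shannon–Hartley formula for a noisy communication channel, where the maximum data rate grows with the logarithm of the signal-to-noise ratio. The sketch below shows that standard formula only. It is not the resolution limit derived in Narimanov's paper, and the function name is made up for illustration.

```python
import math

def channel_capacity(bandwidth_hz, snr_linear):
    """Shannon-Hartley capacity in bits per second.

    bandwidth_hz: channel bandwidth in hertz.
    snr_linear:   signal-to-noise power ratio as a plain ratio, not in decibels.
    """
    return bandwidth_hz * math.log2(1.0 + snr_linear)

if __name__ == "__main__":
    # Each tenfold increase in SNR adds a roughly constant amount of capacity.
    for snr in (10, 100, 1000):
        print(snr, channel_capacity(3000.0, snr))
```

The logarithm is the point to notice: a better signal-to-noise ratio always helps, but with diminishing returns.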

Leveraging this theory, the paper also includes a number of calculations for more specific imaging systems, such as those that use structured illumination, and for the case of imaging sparse objects, which have few features, often clumped together. The possibilities for improving an image with postprocessing are also discussed: we are all familiar with television crime dramas that seem able to enhance images at will. Although this is not possible as shown on TV, there is an element of truth. Valid methods for computationally postprocessing an image can reveal some hidden details. This theory shows the limits of that approach, too.

Narimanov’s approach does not reveal which aspect of a system currently limits the resolution. For that, more specific models are still required. Instead, it is better to think of Narimanov’s model as a guide: where are the biggest gains in resolution to be found for the least effort? That information is useful when deciding where to invest time and money.

Evgenii Narimanov. Resolution limit of label-free far-field microscopy, Advanced Photonics (2019). DOI: 10.1117/1.AP.1.5.056003

Citation: Blurry imaging limits clarified thanks to information technology (2019, November 8), retrieved 8 November 2019 from https://phys.org/news/2019-11-blurry-imaging-limits-technology.html